Import from Bitwarden

You can import Bitwarden JSON files into Passwork via API.

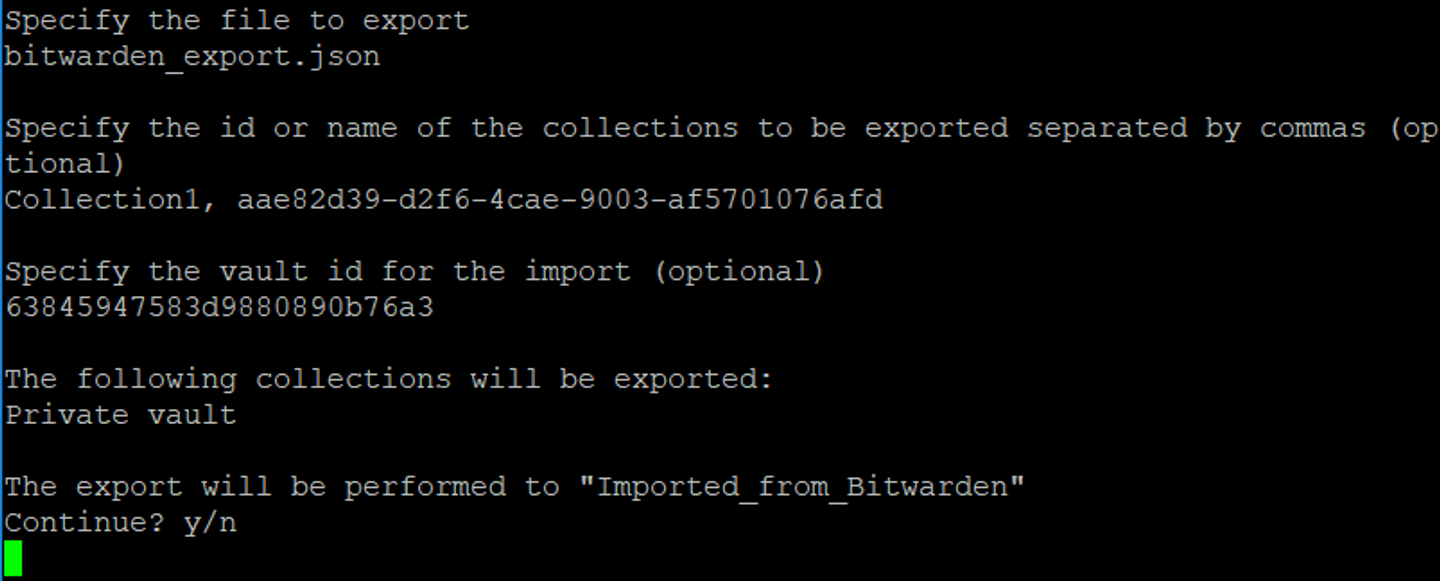

Run the import.js script and follow the prompts.

info

TOTP codes must be valid; otherwise the script terminates with an error.
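Because one bad TOTP value stops the import, it can help to scan the export file beforehand. The sketch below is only a pre-check under stated assumptions: it treats a value as acceptable when it is a bare Base32 string or an `otpauth://` URI with a Base32 `secret` parameter. The rules Passwork actually applies may differ, and the helper names `looksLikeTotp` and `findSuspectTotpItems` are hypothetical.

```javascript
// Assumption: "valid" means Base32, either bare or inside an otpauth:// URI.
// Passwork's real validation rules are not documented here and may be stricter.
const BASE32 = /^[A-Z2-7]+=*$/i;

function looksLikeTotp(value) {
  // Secrets are often displayed in groups separated by spaces; ignore whitespace.
  const text = String(value).replace(/\s+/g, '');
  if (text.toLowerCase().startsWith('otpauth://')) {
    try {
      const secret = new URL(text).searchParams.get('secret');
      return Boolean(secret) && BASE32.test(secret);
    } catch (e) {
      return false; // not a parseable URI
    }
  }
  return text.length > 0 && BASE32.test(text);
}

// Returns the names of items whose login.totp value fails the check above.
function findSuspectTotpItems(items) {
  return items
    .filter((item) => item.login && item.login.totp && !looksLikeTotp(item.login.totp))
    .map((item) => item.name);
}
```

Calling `findSuspectTotpItems(jsonData.items)` on the parsed export gives a list of entries to review before starting the import.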

Configure and run (DEB-based distributions)

- Get root privileges and update the local package database:

sudo -i

apt-get update

- Install Node.js and npm:

apt install nodejs npm -y

warning

The Node.js version must be 17 or higher.

- Check the installed version:

node -v
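The version requirement can also be checked from Node.js itself. This is a minimal sketch; the function name `isSupportedNodeVersion` is hypothetical and the minimum of 17 is taken from the warning above.

```javascript
// Returns true when the major part of a version string such as "v18.19.0"
// or "18.19.0" is at least minimumMajor.
function isSupportedNodeVersion(version, minimumMajor = 17) {
  const major = Number.parseInt(String(version).replace(/^v/, '').split('.')[0], 10);
  return Number.isInteger(major) && major >= minimumMajor;
}

// process.versions.node holds the running version without the leading "v".
if (!isSupportedNodeVersion(process.versions.node)) {
  console.warn(`Node.js ${process.versions.node} is older than 17; upgrade before running import.js`);
}
```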

- Install modules for importing:

npm install dotenv passwork-js

- Create the import script, import.js.

Source code of import.js:

require("util").inspect.defaultOptions.depth = null;

const env = require('dotenv').config().parsed;

const readline = require('readline');

const fs = require('fs');

const Passwork = require('./node_modules/passwork-js/src/passwork-api');

/** @type PassworkAPI */

const passwork = new Passwork(env.HOST);

function throwFatalError(message, error) {

console.error(message);

console.error(error);

process.exit(1);

}

(async () => {

try {

const [argFileName, argCollections, argPath] = process.argv.slice(2);

let jsonFileName;

let jsonData;

let collectionsToImport = [];

let importVault;

// Authorize

try {

await passwork.login(env.API_KEY, env.USER_MASTER_PASS);

} catch (e) {

throwFatalError('Failed to authorise', e);

}

const rl = readline.createInterface({

input: process.stdin,

output: process.stdout

});

// Read json from bitwarden

const answerFileName = await new Promise(resolve => {

rl.question('\nSpecify the Bitwarden export file to import\n', resolve)

});

jsonFileName = answerFileName ? answerFileName : argFileName;

try {

jsonData = JSON.parse(fs.readFileSync(jsonFileName));

if (!jsonData || !jsonData.hasOwnProperty('items')) {

throw new Error('Invalid JSON file format');

}

} catch (e) {

throwFatalError('Failed to read json file', e);

}

// Specify collections to import

const answerCollections = await new Promise(resolve => {

rl.question('\nSpecify comma-separated IDs or names of the collections to import (optional)\n', resolve)

});

let collections = answerCollections ? answerCollections : argCollections;

if (collections) {

collections = collections.split(',').map(c => c.trim()).filter((c) => c);

} else {

collections = [];

}

if (jsonData.collections && jsonData.collections.length) {

if (collections.length === 0) {

collectionsToImport = jsonData.collections;

} else {

jsonData.collections.forEach(c => {

if (collections.includes(c.name) || collections.includes(c.id)) {

collectionsToImport.push(c);

}

});

}

} else {

collectionsToImport = [];

}

collectionsToImport = [...new Set(collectionsToImport)];

// Specify vault id for import

const answerPath = await new Promise(resolve => {

rl.question('\nSpecify the ID of the vault to import into (optional)\n', resolve)

});

let path = answerPath ? answerPath : argPath;

if (path) {

importVault = await passwork.getVault(path);

if (!importVault) {

throwFatalError('The vault specified for import was not found');

}

}

// Confirm import

let confirmMessage = '\nThe following collections will be imported:\n';

if (jsonData.collections) {

collectionsToImport.forEach(c => {

confirmMessage += `${c.name} (${c.id})\n`;

});

} else {

confirmMessage += 'Private vault\n';

}

if (importVault) {

confirmMessage += `\nThe import will be made into "${importVault.name}"\n`;

}

confirmMessage += 'Continue? y/n\n';

const answerConfirm = await new Promise(resolve => {

rl.question(confirmMessage, resolve)

});

if (answerConfirm.toLowerCase() === 'y') {

rl.close();

importPasswords().then(() => process.exit(0)).catch((e) => {

throwFatalError('error', e);

});

} else {

console.log('The operation has been cancelled');

process.exit(0);

}

async function importPasswords() {

const logFileName = 'import-' + new Date().getTime() + '.log';

function logMessage(message) {

let msg = new Date().toISOString() + ' ' + message + '\n';

fs.appendFileSync(logFileName, msg);

console.log(msg);

}

function preparePasswordFields(data, directories) {

const vaultsNames = getDirectoriesNames(directories);

if (data.type !== 1 && data.type !== 2) {

logMessage(`Object type ${data.type}, ${data.name}`

+ ` from collections ${vaultsNames} has not been imported`);

return;

}

const fields = {

password: '',

name: data.name,

description: data.notes,

custom: [],

};

if (directories.length > 1) {

fields.description = fields.description ? (fields.description + '\n') : '';

fields.description += `A copy of the password can be found in: ${vaultsNames}`;

}

if (data.login) {

if (data.login.username) {

fields.login = data.login.username;

}

if (data.login.password) {

fields.password = data.login.password;

}

if (data.login.totp) {

fields.custom.push({

name: 'TOTP',

value: data.login.totp,

type: 'totp'

});

}

if (data.login.uris) {

// Join all URIs into one comma-separated string (no leading separator)

fields.url = data.login.uris.map((u) => u.uri).filter(Boolean).join(', ');

}

}

if (data.fields) {

data.fields.forEach((field) => {

if (field.type === 0 || field.type === 2) {

fields.custom.push({

name: String(field.name),

value: String(field.value),

type: 'text'

});

} else if (field.type === 1) {

fields.custom.push({

name: String(field.name),

value: String(field.value),

type: 'password'

});

} else {

logMessage(`Field of type "link" of the object ${data.name}`

+ ` from collections ${vaultsNames} has not been imported`);

}

});

}

return fields;

}

function getDirectories(passwordCollectionIds, collections) {

const directories = [];

for (const collectionId of passwordCollectionIds) {

if (collections.hasOwnProperty(collectionId)) {

directories.push(collections[collectionId]);

}

}

return directories;

}

function getDirectoriesNames(directories) {

return directories.map((d) => d.name).join(', ');

}

logMessage('Import from file ' + jsonFileName);

if (collectionsToImport.length) {

if (importVault) {

// Collections as folders

const folders = {};

for (let c = 0; c < collectionsToImport.length; c++) {

const item = collectionsToImport[c];

folders[item.id] = await passwork.addFolder(importVault.id, item.name);

logMessage(`A folder has been created ${folders[item.id].name} based on the collection ${item.id}`)

}

for (let p = 0; p < jsonData.items.length; p++) {

const passwordData = jsonData.items[p];

const foldersList = getDirectories(passwordData.collectionIds, folders);

if (foldersList.length === 0) {

continue;

}

logMessage(`Import started ${passwordData.name}`);

let fields = preparePasswordFields(passwordData, foldersList);

if (!fields) {

continue;

}

fields.vaultId = importVault.id;

for (const folder of foldersList) {

fields.folderId = folder.id;

await passwork.addPassword(Object.assign({}, fields));

logMessage(`Import completed ${passwordData.name}`);

}

}

} else {

// Collections as vaults

const vaults = {};

for (let c = 0; c < collectionsToImport.length; c++) {

const item = collectionsToImport[c];

const vaultId = await passwork.addVault(item.name);

vaults[item.id] = await passwork.getVault(vaultId);

logMessage(`The vault has been created ${vaults[item.id].name} based on the collection ${item.id}`)

}

for (let p = 0; p < jsonData.items.length; p++) {

const passwordData = jsonData.items[p];

const vaultsList = getDirectories(passwordData.collectionIds, vaults);

if (vaultsList.length === 0) {

continue;

}

logMessage(`Import started ${passwordData.name}`);

let fields = preparePasswordFields(passwordData, vaultsList);

if (!fields) {

continue;

}

for (const vault of vaultsList) {

fields.vaultId = vault.id;

await passwork.addPassword(Object.assign({}, fields));

logMessage(`Import completed ${passwordData.name}`);

}

}

}

logMessage(`Import completed`);

process.exit(0);

return;

}

if (collectionsToImport.length === 0 && jsonData.items[0].organizationId === null) {

// Private vault import

if (!importVault) {

const vaultId = await passwork.addVault('Private vault', true);

importVault = await passwork.getVault(vaultId);

logMessage(`Vault ${importVault.name} was created `);

}

const folders = {};

if (jsonData.folders) {

for (const folder of jsonData.folders) {

folders[folder.id] = await passwork.addFolder(importVault.id, folder.name);

}

}

for (let p = 0; p < jsonData.items.length; p++) {

const passwordData = jsonData.items[p];

logMessage(`Import started ${passwordData.name}`);

let fields = preparePasswordFields(passwordData, [importVault]);

if (!fields) {

continue;

}

fields.vaultId = importVault.id;

if (passwordData.folderId) {

fields.folderId = folders[passwordData.folderId].id;

}

await passwork.addPassword(Object.assign({}, fields));

logMessage(`Import completed ${passwordData.name}`);

}

logMessage(`Import completed`);

process.exit(0);

return;

}

logMessage(`Import format could not be determined`);

process.exit(0);

}

} catch (e) {

throwFatalError('error', e);

}

})();
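The script chooses one of three import modes depending on the contents of the export and on whether a target vault was specified. The sketch below restates that decision as a standalone function, together with a minimal sample of the fields the script reads. The function name `detectImportMode`, the mode labels and all sample values are illustrative assumptions, and the guard for an empty `items` array is an addition that import.js itself does not have.

```javascript
// Minimal shape of an organization export, limited to fields import.js reads.
// All values are made up for illustration.
const sampleExport = {
  collections: [{ id: 'c1', name: 'Collection 1' }],
  items: [
    {
      type: 1, // 1 and 2 are imported; other item types are skipped and logged
      name: 'Example login',
      notes: null,
      organizationId: 'org-1', // null in a personal (private vault) export
      collectionIds: ['c1'],
      login: {
        username: 'alice',
        password: 'secret',
        totp: null,
        uris: [{ uri: 'https://example.com' }]
      },
      fields: [{ name: 'PIN', value: '1234', type: 1 }] // 0 and 2: text, 1: password
    }
  ]
};

// Mirrors the branch order used by importPasswords().
function detectImportMode(collectionsToImport, items, hasTargetVault) {
  if (collectionsToImport.length > 0) {
    // With a target vault each collection becomes a folder, otherwise a new vault.
    return hasTargetVault ? 'collections-as-folders' : 'collections-as-vaults';
  }
  // Assumption: guard against an empty items array, which import.js does not do.
  if (items.length > 0 && items[0].organizationId === null) {
    return 'private-vault';
  }
  return 'undetermined';
}

console.log(detectImportMode(sampleExport.collections, sampleExport.items, false));
```

In the private vault mode, the entries of `jsonData.folders` are recreated as folders inside the target vault, and a vault named "Private vault" is created when no vault ID was given.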

- Create a .env file and specify the Passwork host, the user's API key and the user's master password:

HOST='https://your_host/api/v4'

API_KEY=

USER_MASTER_PASS=
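import.js reads these three values from `require('dotenv').config().parsed` and does not check them before logging in. A small pre-flight check along the following lines could report missing entries early; it is a sketch only, and the names `REQUIRED_KEYS` and `missingEnvKeys` are hypothetical.

```javascript
// Keys that import.js reads from the parsed .env file.
const REQUIRED_KEYS = ['HOST', 'API_KEY', 'USER_MASTER_PASS'];

// Returns the required keys that are absent or blank in a parsed .env object.
// A missing object (for example, when no .env file was loaded) counts as all missing.
function missingEnvKeys(env) {
  return REQUIRED_KEYS.filter((key) => !env || !String(env[key] || '').trim());
}

console.log(missingEnvKeys({ HOST: 'https://your_host/api/v4', API_KEY: '', USER_MASTER_PASS: '' }));
```

In the script, the result of `missingEnvKeys(env)` could be passed to `throwFatalError` when it is not empty.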

- Copy the Bitwarden JSON export file to the server and run the script. The script asks for the name of the file:

node import.js

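- You can also pass the file name, the collection list and the vault ID as positional arguments, in that order. The prompts still appear; an argument is used when the corresponding prompt is left empty: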

node import.js bitwarden_export_org.json "Collection 1"
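The way the script combines prompt answers with command-line arguments can be sketched as a pure function. It follows the logic in import.js, where a non-empty answer takes precedence over the argument; the function name `resolveInputs` and the shape of the `answers` object are assumptions made for this illustration.

```javascript
// argv has the same layout as process.argv: [node, script, file, collections, vaultId].
// answers holds the text typed at each prompt ('' when the prompt was left empty).
function resolveInputs(argv, answers) {
  const [argFileName, argCollections, argPath] = argv.slice(2);
  const pick = (answer, arg) => (answer ? answer : arg);
  const collections = pick(answers.collections, argCollections);
  return {
    fileName: pick(answers.fileName, argFileName),
    // Comma-separated names or IDs, trimmed, with empty entries dropped.
    collections: collections
      ? collections.split(',').map((c) => c.trim()).filter((c) => c)
      : [],
    vaultId: pick(answers.vaultId, argPath)
  };
}

console.log(resolveInputs(
  ['node', 'import.js', 'bitwarden_export_org.json', 'Collection 1'],
  { fileName: '', collections: '', vaultId: '' }
));
```

An empty collection list means that every collection in the export is imported.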